By Tech Dr Alex Jonsson

How do you sample high-resolution biometric data, use low-power wide-area networks (LPWAN) and still achieve high-quality results on trickle-feed battery power alone? The answer is Edge AI (aka tinyML), and here's how Imagimob implemented an unsupervised model, namely a Gaussian Mixture Model (GMM), to do just that!

Introduction

Within the AFarCloud project [1], Imagimob has for the past two years had the privilege of working with some of the most prominent organisations and kick-ass scientists in precision agriculture (Universidad Politécnica de Madrid, the Division of Intelligent Future Technologies at MDH, Charles University in Prague and RISE Research Institutes of Sweden) to create an end-to-end service for real-time monitoring of the health and well-being of cattle.

Indoors, foremost for dairy cows, there are commercially available methods today that combine multisensor systems (cameras), radio beacons and specialist equipment such as ruminal probes, allowing farmers to keep their livestock mostly indoors under continuous surveillance. In many European countries, however, much of the beef cattle is kept outdoors, often on large pastures spanning hundreds or thousands of hectares. There, short-range technologies that require high data rates are ill-suited to aggregating sensor data, since access to power lines and electrical outlets is scarce and most equipment is battery-powered only.

A small, sealed enclosure worn around the neck crams in a 32-bit microcomputer, 9-axis movement sensors from Bosch sampling at the full 50 Hz, a long-range radio and a 40-gram Tesla-style battery cell. The magic is that all of the analysis is performed on that very microcomputer, then and there at the edge (literally on-cow AI), rather than the traditional approach of transferring raw data to a cloud for processing and storage. Every hour, a small data set containing the refined result is sent over the airwaves, letting the farmer see how much of the animal's time has gone into feeding, ruminating, resting, romping around et cetera, in the form of an activity pie chart. By monitoring many animals, each individual can be compared both with its peers and with its own activity over time.

Periods of heat (fertility) are important for the farmer to monitor, as cows only give milk after becoming pregnant [2]. Among other things, cows in heat are more restless and alert, tend to stand while the rest of the herd is lying down resting, fondle one another and bellow frequently.
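The hourly summary described above could, for instance, be produced by counting per-window activity labels and packing the shares into a handful of bytes. The sketch below is hypothetical: the activity names, the 2-second window length, the 2-byte animal ID and the one-byte-per-activity encoding are our assumptions, not the collar's actual payload format.

```python
import struct
from collections import Counter

# Hypothetical activity classes; the collar's real label set may differ.
ACTIVITIES = ("feeding", "ruminating", "resting", "moving", "other")


def hourly_summary(window_labels):
    """Whole-percent share of the hour spent in each activity."""
    if not window_labels:
        raise ValueError("no classified windows in this hour")
    # Any label outside the known set is counted as "other".
    counts = Counter(lbl if lbl in ACTIVITIES else "other" for lbl in window_labels)
    total = len(window_labels)
    return {a: round(100 * counts[a] / total) for a in ACTIVITIES}


def encode_payload(animal_id, shares):
    """Big-endian: 2-byte animal ID, then one unsigned byte (0-100) per activity."""
    fmt = ">H" + "B" * len(ACTIVITIES)
    return struct.pack(fmt, animal_id, *(shares[a] for a in ACTIVITIES))


# Usage: one hour of 2-second windows = 1800 labels (window length is an assumption).
labels = ["feeding"] * 720 + ["ruminating"] * 540 + ["resting"] * 450 + ["moving"] * 90
shares = hourly_summary(labels)
payload = encode_payload(42, shares)
print(shares)        # {'feeding': 40, 'ruminating': 30, 'resting': 25, 'moving': 5, 'other': 0}
print(len(payload))  # 7
```

Rounding to whole percentages means the shares may not always sum to exactly 100, a trade-off that keeps each activity to a single byte.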

Training & AI models

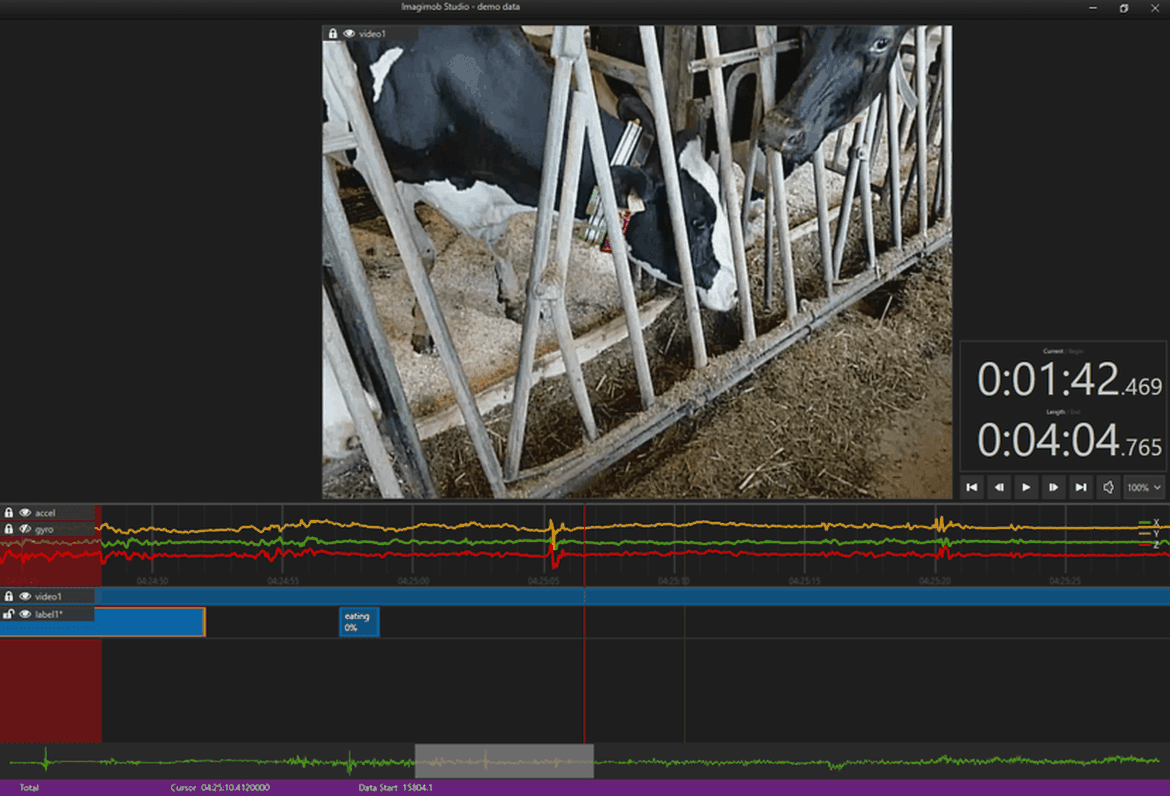

We use the Imagimob AI SaaS and desktop software in-house too, and they take care of much of the heavy lifting once you have an H5 file (Hierarchical Data Format, a container for multi-dimensional scientific data sets). The tricky part is gathering data from the field. For this purpose, we use another device that contains essentially the same movement sensors, e.g. the Bosch BMA280, BMA456 or BMA490L chip, plus an SD card, a clock chip and a battery allowing weeks of continuous measurements. Some of the time, the cows were videotaped, allowing us to line up the sensor readings with the goings-on in the meadows. Using the Imagimob capture system, the activities are then labelled for the training phase.
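Lining up video and sensor data boils down to converting labelled time intervals into per-sample labels. A minimal sketch, assuming labels are recorded as (start, end, label) intervals in video seconds and that a single clock offset maps video time onto the sensor clock; the function and parameter names are hypothetical and say nothing about how the Imagimob capture system does this internally.

```python
SAMPLE_RATE_HZ = 50  # sensor sampling rate, as on the collar


def labels_per_sample(n_samples, intervals, video_offset_s=0.0, default="unlabelled"):
    """Expand (start_s, end_s, label) video intervals into one label per sensor sample.

    video_offset_s is the sensor-clock time at which the video starts
    (a hypothetical synchronisation parameter).
    """
    labels = [default] * n_samples
    for start_s, end_s, label in intervals:
        first = max(0, round((start_s + video_offset_s) * SAMPLE_RATE_HZ))
        last = min(n_samples, round((end_s + video_offset_s) * SAMPLE_RATE_HZ))
        for i in range(first, last):  # end index is exclusive
            labels[i] = label
    return labels


# Usage: 10 s of data; the video started 1 s after the sensor log.
labels = labels_per_sample(500, [(0.0, 4.0, "feeding"), (4.0, 6.0, "walking")],
                           video_offset_s=1.0)
print(labels[49], labels[50], labels[250], labels[350])  # unlabelled feeding walking unlabelled
```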

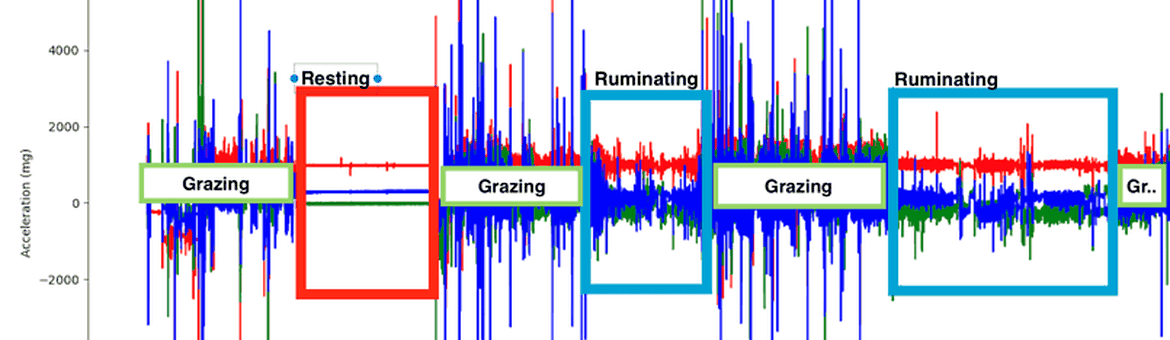

Picture: Cow activity timeline in Imagimob Studio software

After labelling, which can be done in real time or post-capture using the video track as reference, the HDF files can be assembled and sent for model training. The good part is that you don't need 50M+ records to train some of the models. The ever-popular Gaussian Mixture Model is the saviour of many IoT projects, where a lack of training data is a common show-stopper. Here, the assumption is that the observed events can be modelled as a combination of different distributions; by fitting a mathematical representation that is good enough, the system can quickly identify what is considered normal behaviour and when events deviate from it, without actually knowing what the scenario is, or in what way a change of state will disrupt the current normal state. We commonly use other models for our Edge AI and tinyML applications, but cows are notoriously hard to command: we can't ask them to, say, trip over 500 times in a row, the way our researchers can in another context where they are gathering data for a wrist-worn fall-detection device for the elderly.
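To make the GMM idea concrete, here is a minimal one-dimensional sketch in plain Python: fit a mixture with expectation-maximisation (EM) on "normal" data, then flag new windows whose log-likelihood falls below the lowest 1% seen in training. The single feature, the two components, the quantile initialisation and the 1% threshold are all assumptions for illustration; a real model would typically use several features per window (e.g. a multivariate GMM with diagonal covariances), and Imagimob's actual model configuration is not described here.

```python
import math
import random


def _log_normal(x, mean, var):
    """Log density of a 1-D normal distribution."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)


def _logsumexp(values):
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def log_likelihood(x, model):
    """Log-likelihood of one observation under the mixture."""
    weights, means, variances = model
    return _logsumexp([math.log(w) + _log_normal(x, m, v)
                       for w, m, v in zip(weights, means, variances)])


def fit_gmm(xs, k=2, iters=100, min_var=1e-6):
    """Fit a 1-D Gaussian mixture with expectation-maximisation (EM)."""
    n = len(xs)
    ordered = sorted(xs)
    # Initialise means at evenly spaced quantiles, variances at the overall variance.
    means = [ordered[int((j + 0.5) * n / k)] for j in range(k)]
    mu = sum(xs) / n
    variances = [max(sum((x - mu) ** 2 for x in xs) / n, min_var)] * k
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: responsibility of each component for each observation.
        resp = []
        for x in xs:
            logs = [math.log(w) + _log_normal(x, m, v)
                    for w, m, v in zip(weights, means, variances)]
            norm = _logsumexp(logs)
            resp.append([math.exp(lg - norm) for lg in logs])
        # M-step: re-estimate weights, means and variances.
        for j in range(k):
            nj = max(sum(r[j] for r in resp), 1e-12)  # guard against empty components
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            variances[j] = max(sum(r[j] * (x - means[j]) ** 2
                                   for r, x in zip(resp, xs)) / nj, min_var)
    return weights, means, variances


def anomaly_threshold(xs, model, quantile=0.01):
    """Log-likelihood below which a window is treated as 'not normal'."""
    scores = sorted(log_likelihood(x, model) for x in xs)
    return scores[int(quantile * len(scores))]


# Usage with synthetic data: a hypothetical per-window activity feature with
# two 'normal' regimes (e.g. resting around 0.1 and grazing around 1.0).
rng = random.Random(1)
normal = ([rng.gauss(0.1, 0.05) for _ in range(300)]
          + [rng.gauss(1.0, 0.2) for _ in range(300)])
model = fit_gmm(normal, k=2)
threshold = anomaly_threshold(normal, model)
for x in (0.1, 1.0, 3.0):
    print(x, "anomaly" if log_likelihood(x, model) < threshold else "normal")
```

Because the model only learns what "normal" looks like, nothing in it needs to know what the deviating event actually is; the threshold quantile simply trades false alarms against missed events.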

The last step of deployment, going from an H5 file to a runnable C-code binary, is highly automated and part of the services Imagimob also provides to customers, giving them very short turnaround times between training and deployment cycles. Done manually, this step could take hours or days; automated, it is considerably faster and requires no programming on our customers' part. Pretty neat, actually, and it has saved us a lot of time in this project alone.

Picture: How sensor data can be classified along the timeline

Conclusions & next steps

We're at the end of the second year of this three-year project, and we have already seen great interest in the savings in time and resources spent on each iteration of creating the Edge AI software modules, and even more so in the ability to exploit LPWAN networks such as NB-IoT (cellular narrowband), LoRa, 6LoWPAN or Sigfox, which trickle along at anywhere from tens of bytes per second up to about 5 kbit/s in real-world agricultural applications.
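A quick back-of-the-envelope calculation shows why streaming raw data over these links is not an option. Assuming 16-bit readings on each of the 9 axes at 50 Hz (the bit depth is our assumption) and a hypothetical 7-byte hourly summary, the raw stream alone exceeds even the ~5 kbit/s upper end of the LPWAN rates quoted above:

```python
AXES = 9                    # 9-axis movement sensors, per the text
SAMPLE_RATE_HZ = 50         # per the text
BITS_PER_READING = 16       # assumption: 16-bit value per axis
LPWAN_CEILING_BPS = 5_000   # upper end of the quoted LPWAN range
SUMMARY_BYTES = 7           # hypothetical size of one hourly summary

raw_bps = AXES * SAMPLE_RATE_HZ * BITS_PER_READING
raw_bytes_per_hour = raw_bps // 8 * 3600
summary_bps = SUMMARY_BYTES * 8 / 3600

print(f"raw stream: {raw_bps} bit/s ({raw_bytes_per_hour:,} bytes/hour)")
print(f"raw / LPWAN ceiling: {raw_bps / LPWAN_CEILING_BPS:.2f}x")
print(f"hourly summary: {summary_bps:.4f} bit/s, "
      f"{raw_bytes_per_hour // SUMMARY_BYTES:,}x less data")
# raw stream: 7200 bit/s (3,240,000 bytes/hour)
# raw / LPWAN ceiling: 1.44x
# hourly summary: 0.0156 bit/s, 462,857x less data
```

Protocol headers, duty-cycle limits and retransmissions are not modelled here and would only widen the gap.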

For us, the journey continues with the classification of vehicles: not so much the autonomous tractors used in cutting-edge precision farming, but all sorts of legacy vehicles for the rest of us. It's easy to learn where they are, as a GPS unit with a SIM card holder costs $20 off eBay, but from a fleet-management perspective, how would you know what they are all actually doing? How much idle time does a tractor have during a working week, and how often will it need maintenance given its current workload? With low-cost standard components, a radio coverage radius of miles and some clever Edge AI, any mobile asset could be monitored over time in an economically sane fashion.
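As a first baseline for the idle-time question, one could split the acceleration magnitude into windows and threshold the vibration level. The sketch below is hypothetical: the three states, the window length and both thresholds are made-up values, and in practice a trained Edge AI model would replace the hand-picked thresholds.

```python
import statistics


def classify_windows(magnitudes, window, off_std, work_std):
    """Label non-overlapping windows of acceleration magnitude (in g) by vibration level.

    off_std and work_std are hypothetical thresholds on the window's standard deviation:
    below off_std -> "off", below work_std -> "idle", otherwise "working".
    """
    labels = []
    for start in range(0, len(magnitudes) - window + 1, window):
        sd = statistics.pstdev(magnitudes[start:start + window])
        if sd < off_std:
            labels.append("off")
        elif sd < work_std:
            labels.append("idle")
        else:
            labels.append("working")
    return labels


def idle_fraction(labels):
    """Share of engine-on windows spent idling rather than working."""
    on = [lbl for lbl in labels if lbl != "off"]
    return on.count("idle") / len(on) if on else 0.0


# Usage with a synthetic signal: engine off, then idling, then working.
off = [1.0] * 100
idle = [1.0 + (0.02 if i % 2 else -0.02) for i in range(100)]
work = [1.0 + (0.3 if i % 2 else -0.3) for i in range(300)]
labels = classify_windows(off + idle + work, window=50, off_std=0.01, work_std=0.1)
print(labels.count("idle"), labels.count("working"))  # 2 6
print(idle_fraction(labels))                          # 0.25
```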

We're also looking into mid-range radio for precision agriculture applications, where long-range Bluetooth (v5) technology is gaining ground. Bluetooth radio has proven very useful at ranges under 30 ft, being both robust and fairly secure, but its limited range has held it back. Preliminary tests with the Nordic Semiconductor nRF52840 SoC show that ranges of 1,000+ ft are no longer out of reach [3], and we'd like to see how, e.g., positioning applications sans GPS would pan out once we add some tinyML to the mix!

References

[1] AFarCloud website, https://www.afarcloud.eu

[2] Sherwood, L., Klandorf, H. and Yancey, P. 2013. Animal Physiology: From Genes to Organisms. 2nd ed. Belmont: Cengage Learning.

[3] Long-range tested by Nordic Semiconductor, https://blog.nordicsemi.com/getconnected/tested-by-nordic-bluetooth-long-range