Accidents caused by people falling are a real menace to society. Falls are a common reason why working professionals as well as elderly people suffer long-term illness, loss of bodily functions, reduced mobility and independence or, at worst, death. Detecting actual falls, as well as behavior that signals an elevated risk of falling, is considered vital for reducing complications and improving quality of life. Elderly people with a history of stroke are considered especially prone to falling at home and in other everyday situations.

The Swedish National Board of Health and Welfare (Socialstyrelsen) reported in its findings (May 2022) that over 100 000 people aged 65 or older fall badly enough to need professional healthcare. About 2 000 elderly people also die each year as a direct or indirect consequence of falling. Overall, about 7 out of 10 people who experience a serious fall are over 65 years old. [https://www.socialstyrelsen.se/kunskapsstod-och-regler/omraden/aldre/fallolyckor/, Oct 2, 2022].

In this context, to fall means something along the lines of “unintentionally coming to ground, or some lower level”, as coined by the Kellogg working group on fall prevention in the late 1980s. There are many ways a fall can happen, depending on the situation, and we’ll soon see what it takes to be more specific about it. Professionals who work physically with heavy equipment, at great heights or close to industrial processes, in sectors such as forestry, process industry and transport, statistically belong to fall-prone industries.

In fact, for forest workers in general, falling is among the most common causes of injury and death, second only to losing control of a vehicle. Falls, rockslides and explosions are also runners-up among the most common causes of death in the workplace in general, according to Swedish national statistics. Lone workers in the forest, alongside major roads and in confined spaces are further groups where falling accidents have proven to take their toll, often in situations where contacting others for help can be difficult. [Zecevic et al. Defining a fall and reasons for falling: Comparisons among the views of seniors, health care providers, and the research literature. Gerontologist. 2006;46:367–376]

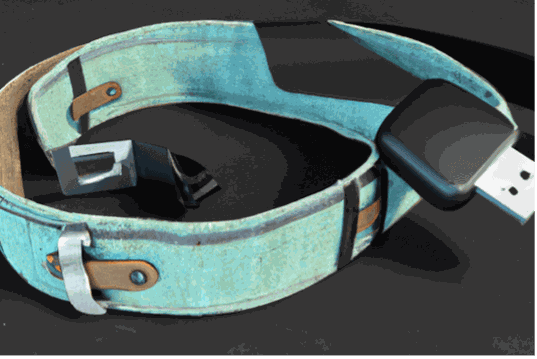

This write-up focuses on how to detect when someone falls and covers the main strategies available. In the early days, detection was largely a matter of wearing special gadgets on the body, initially quite bulky and with various wires attached. They would basically detect tilting motions and the general orientation of the body part where they were worn. A typical example was a waist belt equipped with a mercury switch that closed the circuit when the switch enclosure became horizontal, the conductive liquid bridging the gap between the two contacts.

Back in the day, measuring forces (acceleration) was more a matter of threshold-type sensing: a spring-loaded rod with a metal ring around it at a given distance triggered an alarm when rod and ring touched as linear or angular motion became vigorous. The same principle can be seen in electronic wake-up circuits to this day.

As sensors became more sophisticated, it became possible to measure very accurately the force applied to a body relative to its rest frame. Today, micro-electromechanical systems (MEMS) are commonly used, often built around a small cantilever in a gas-filled enclosure. With three such sensors combined, three perpendicular directions can be measured with high accuracy in a form factor less than one millimeter thick.

There are basically three main strategies for detecting falling persons, plus combinations thereof:

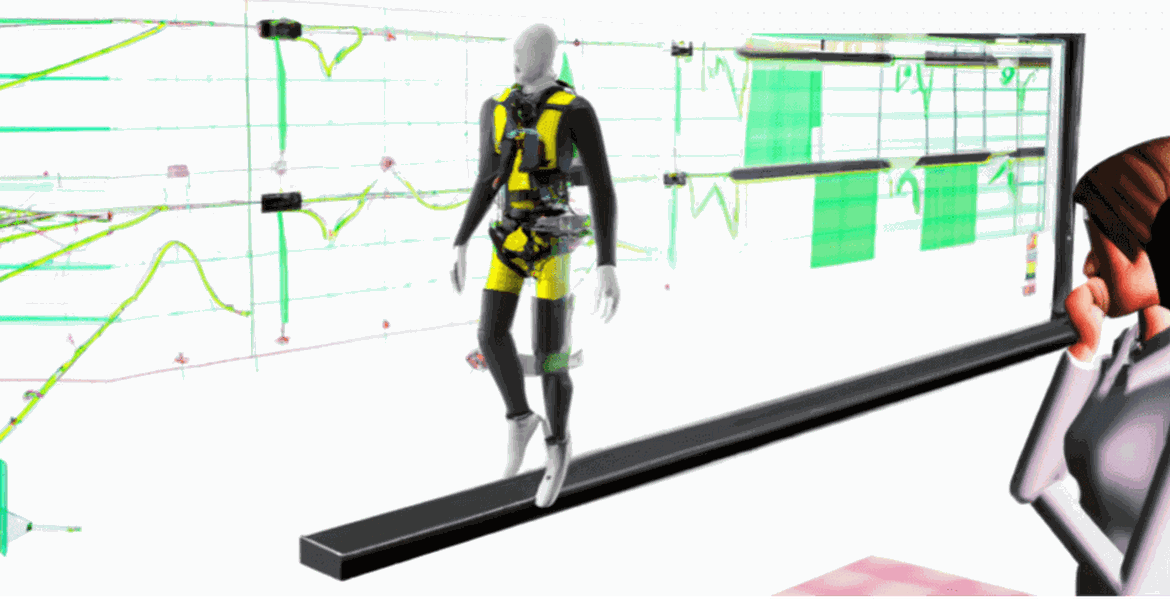

Imagimob has worked with both room-oriented and individual-oriented strategies, but this article focuses on the latter. We have primarily specialized in strategies involving wearables for fall detection, and our customers and partners have used the Imagimob AI studio software in several successful use-cases, all of which will be presented in further detail in this article series on fall detection. The technology as such is agnostic to the kind of sensor data presented as input. The sheer speed and computational power of our neural network model, in combination with a small computational footprint, makes our Edge AI system especially suitable for sensor-near calculations and on-device analytics on a small form-factor device worn on the person as a watch, jewelry or bracelet, or embedded in garments or headgear.

Once the sensor part is defined and established, the data needs to be collected and analyzed. Both false negatives and false positives must be dealt with: either an actual fall isn’t detected, or ordinary movement is wrongly detected as a fall. Either of these creates uncertainty and distrust in the system over time. Together with correctly detected falls and correctly ignored non-falls, these make up four cases that are summarized in two measures, sensitivity and specificity. The former is the ability to detect a fall when someone has actually fallen, and the latter is the ability to avoid detecting a fall when someone is going about their business and not falling. They were defined in 1947 by the American biostatistician Jacob Yerushalmy as follows:

Sensitivity = correctly detected falls / (correctly detected falls + false negatives)

Specificity = correctly identified non-falls / (correctly identified non-falls + false positives)

If both values are in the high 90s, around 98–99 percent, the model can be considered useful for that specific area of use. Naturally, someone lying motionless after an undetected fall is worse than a false alarm after a stumble, so the evaluation needs to be weighted to take this into account.
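As a minimal sketch of the two measures, the snippet below computes sensitivity and specificity from the four outcome counts. The counts, the function names and the `weighted_error_cost` helper with its 10:1 weighting are assumptions made up for illustration; they only show one simple way a missed fall could be made to count more heavily than a false alarm.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Share of actual falls that the detector caught."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives: int, false_positives: int) -> float:
    """Share of non-fall events that the detector correctly ignored."""
    return true_negatives / (true_negatives + false_positives)


def weighted_error_cost(false_negatives: int, false_positives: int,
                        miss_weight: float = 10.0,
                        false_alarm_weight: float = 1.0) -> float:
    """Hypothetical cost where a missed fall weighs more than a false alarm."""
    return miss_weight * false_negatives + false_alarm_weight * false_positives


# Invented evaluation counts, for illustration only.
tp, fn, tn, fp = 98, 2, 990, 10

print(f"sensitivity: {sensitivity(tp, fn):.2f}")
print(f"specificity: {specificity(tn, fp):.2f}")
print(f"weighted cost: {weighted_error_cost(fn, fp):.1f}")
```

With a weighting like this, two candidate models with similar sensitivity and specificity can still be ranked by how costly their mistakes are in the intended use.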

Falling from a lying position (e.g. out of bed) or from sitting plays out differently from falling while standing, walking or running, and recordings of the critical part of the fall, with or without recovery, need to be classified separately. Hence the temporal aspects are important: the actual event is very short (approx. 0.3–0.5 s), yet the periods before (pre-fall) and after (post-fall) the fall are instrumental in deciding what kind of response should be triggered.

Most falls show a significant segment of negative acceleration in the data, as well as the characteristic change from positive to negative values as the subject decelerates on hitting the lower level. The beginning of the fall is often characterized by a stage of weightlessness, where acceleration in all three dimensions is near zero, followed by the impact, where forces can reach -2g or beyond. The required response time of a fall detection system varies. In some cases analysis time must be very short, for instance when an airbag needs to deploy before impact, while in other cases more accuracy and temporal data are required, for example to sound an alarm, to catch the attention of relatives or to gather statistical data for longitudinal monitoring.
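The weightlessness-then-impact pattern can be illustrated with a simple two-threshold check on the acceleration magnitude. This is only a sketch under stated assumptions: samples are `(ax, ay, az)` tuples in units of g from a wearable accelerometer that reads about 1 g at rest, and the sample rate, the thresholds (`free_fall_g`, `impact_g`) and the maximum gap are illustrative values, not figures from any particular product or study.

```python
import math


def magnitude(sample):
    """Euclidean norm of one (ax, ay, az) accelerometer sample, in g."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)


def find_fall_candidate(samples, sample_rate_hz=50.0, free_fall_g=0.4,
                        impact_g=2.0, max_gap_s=0.5):
    """Return the index of an impact that follows a free-fall phase, else None.

    All threshold values are assumptions chosen for illustration.
    """
    max_gap = int(max_gap_s * sample_rate_hz)
    last_free_fall = None  # index of the most recent near-weightless sample
    for i, sample in enumerate(samples):
        m = magnitude(sample)
        if m < free_fall_g:
            last_free_fall = i
        elif (m > impact_g and last_free_fall is not None
              and i - last_free_fall <= max_gap):
            return i
    return None


# Synthetic traces, invented for illustration.
standing = [(0.0, 0.0, 1.0)] * 10
free_fall = [(0.0, 0.0, 0.1)] * 15           # about 0.3 s at 50 Hz
impact = [(0.5, 0.3, 2.8)]
lying = [(1.0, 0.0, 0.0)] * 10

fall_trace = standing + free_fall + impact + lying
quiet_trace = standing * 4

print(find_fall_candidate(fall_trace))   # index of the impact sample
print(find_fall_candidate(quiet_trace))  # no candidate found
```

A check like this responds within a few samples of the impact, which suits the short-latency case, but on its own it says nothing about what happens after the event.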

The early threshold-based methods proved to take fall detection only so far. Even when several thresholds were combined, falls couldn’t be classified properly, and the methods were prone to both false positives and false negatives. One study from 2007, for example, stated that a fall could have occurred when:

[Noury et al, Fall detection – Principles and Methods, Proceedings of the 29th Annual International Conference of the IEEE EMBS, 2007]

It’s clear, however, that just looking at the vector isn’t sufficient for accurate detection, as ordinary jumping also generates a sudden change in it. Therefore, the activity that follows can be monitored as well: if the maximum is directly followed by the person standing straight up, then it is not a fall.
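The idea of checking what follows the peak can be sketched as a posture test on the samples shortly after it. The sketch assumes that samples are `(ax, ay, az)` tuples in g and that the sensor z-axis points along the body’s long axis when the wearer stands upright. The settle time, window length and 45 degree tilt limit are illustrative assumptions, and `stood_up_after_peak` is a hypothetical helper, not part of any library.

```python
import math


def tilt_from_vertical_deg(sample):
    """Angle in degrees between the measured acceleration and the z-axis."""
    ax, ay, az = sample
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        return 90.0  # no usable direction; treat as not upright
    cos_angle = max(-1.0, min(1.0, az / norm))
    return math.degrees(math.acos(cos_angle))


def stood_up_after_peak(samples, peak_index, sample_rate_hz=50.0,
                        settle_s=1.0, window_s=1.0, max_tilt_deg=45.0):
    """True if the mean posture shortly after the peak is close to upright."""
    start = peak_index + int(settle_s * sample_rate_hz)
    window = samples[start:start + int(window_s * sample_rate_hz)]
    if not window:
        return False  # no post-peak data, so no evidence of standing up
    n = len(window)
    mean_sample = tuple(sum(s[axis] for s in window) / n for axis in range(3))
    return tilt_from_vertical_deg(mean_sample) <= max_tilt_deg


# Synthetic traces, invented for illustration; the peak is at index 10.
upright = (0.0, 0.0, 1.0)
lying = (1.0, 0.0, 0.0)
peak = (0.0, 0.0, 2.5)

jump_trace = [upright] * 10 + [peak] + [upright] * 120
fall_trace = [upright] * 10 + [peak] + [lying] * 120

print(stood_up_after_peak(jump_trace, peak_index=10))  # upright afterwards
print(stood_up_after_peak(fall_trace, peak_index=10))  # still lying down
```

A peak followed by an upright posture would be discarded, while a peak followed by a sustained horizontal posture would be kept as a fall candidate.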

Rule-based systems also fall short. They rely on sensor-near calculations of mean values over a given interval, standard deviation, sum of vector magnitudes and tilt angle(s), but struggle to classify and distinguish between different kinds of falls: forwards, backwards, sideways, from climbing, or tripping while running or walking. This is especially true for workers whose physical activities involve vigorous movement without any actual falling. It is only with the introduction of machine learning techniques to the field of fall detection that results have become accurate enough to be useful, reducing both false positives and, equally important, false negatives, where a fall occurs without being recorded as such.
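For reference, the kind of per-window quantities that rule-based systems compute can be sketched as follows. The sketch assumes windows of `(ax, ay, az)` samples in g with the z-axis vertical when the wearer is upright; the function name `window_features` and the dictionary keys are hypothetical, and the exact feature definitions vary between systems.

```python
import math
import statistics


def window_features(window):
    """Summary statistics over one window of (ax, ay, az) samples in g."""
    magnitudes = [math.sqrt(ax * ax + ay * ay + az * az)
                  for ax, ay, az in window]
    n = len(window)
    mean_vec = [sum(s[axis] for s in window) / n for axis in range(3)]
    mean_norm = math.sqrt(sum(c * c for c in mean_vec))
    if mean_norm > 0.0:
        # Tilt of the mean acceleration vector away from the z-axis.
        cos_tilt = max(-1.0, min(1.0, mean_vec[2] / mean_norm))
        tilt_deg = math.degrees(math.acos(cos_tilt))
    else:
        tilt_deg = None  # direction undefined when the mean vector is zero
    return {
        "mean_magnitude": statistics.fmean(magnitudes),
        "std_magnitude": statistics.pstdev(magnitudes),
        "sum_magnitude": sum(magnitudes),
        "tilt_deg": tilt_deg,
    }


# Synthetic windows, invented for illustration.
upright_window = [(0.0, 0.0, 1.0)] * 4
lying_window = [(1.0, 0.0, 0.0)] * 4

print(window_features(upright_window))
print(window_features(lying_window))
```

Hand-written rules then compare such values against fixed limits, whereas a machine learning model can take the same windows, or the raw samples, as input and learn the decision boundaries from labeled recordings.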

A number of machine learning techniques have been tried in experiments and deployments over the years. Those that rely on huge volumes of training data can be cumbersome to accommodate, as 50 million records of various people falling are not easy to come by.

In most cases the data also needs to be labeled, which is a tedious task even with access to accurate multi-node movement data and video footage on a common timeline. One survey of the models in use, while describing its list of methods as non-exhaustive, selected them based on both their usefulness and their popularity at the time [Pannurat et al, Automatic Fall Monitoring: A Review, Special Issue MDPI, 2019]:

The overlap between fall accidents and activities of daily living (ADL) has been the subject of many studies. Here, access to large volumes of well-labeled training data, knowledge of the specific context and systems that learn about the individual subject over time are instrumental to obtaining the best results.

Analyzing relationships between falls and resulting injuries, as well as patterns and long-term trends of fall conditions, can potentially be useful for establishing efficient fall prevention strategies.

Using the Imagimob AI platform, capturing data for training and testing is made easy, deliberately closing the loop between iterations of model development. With very little training, pretty much any regular staff member can take part in the data collection phase. As the system outputs executable binary libraries based on standard libraries without external dependencies, they can be dropped into existing embedded software.

The process from inputting training data to having a running binary is highly automated and streamlined, which reduces lead time dramatically. The Imagimob platform is focused on the development of neural networks for edge devices.

Two of our fall detection customer projects, the Bellpal fall detection watch and the Alert Safety Vest (developed by Scania, and now operated by Swanholm Technology), are use-cases where both a small form factor and a short development time were important success factors.

We have developed a fall detection application that runs on the Syntiant NDP120 AI chip.

We have also developed a room-oriented fall detection system based on radar sensors together with Texas Instruments.

Stay tuned for more blogs on the topic of fall detection.