With the number of IoT devices expected to jump from 26.66 billion in 2019 to 75 billion in 2025, many product developers are taking the leap beyond the cloud to the edge. For many of them, however, the work involved in getting an Edge AI development project off the ground is enough to stop them in their tracks, or at least to cause months of frustrating delays.

Thankfully, a new and better development path has already been forged. If you want to make the most out of your next Edge AI project, it’s time to abandon the old method and embrace the new.

The old way of doing Edge AI

The typical Edge AI development process entails a constant struggle. First, you need to get hold of a vast amount of the right kind of data. Once the data is captured, the tedious and time-consuming task of manually labeling and organizing it begins. Then comes the verification stage, which typically involves generating statistics from large datasets, but even this does not reveal the whole picture. True performance is not evident until you perform integration testing. And if performance isn’t good enough for Edge deployment, a new project has to be set up involving Firmware and Machine Learning Engineers.

Step 1: Data capture

Collect raw time-series data from several devices, struggle with synchronization problems between devices, then build custom solutions for organizing data and metadata collection.

Step 2: Data labeling

Label vast amounts of captured data, either by hand or by writing custom scripts with simple rules. Then struggle to label raw data without proper visualization.

Step 3: Verification

Generate statistics from large datasets and hope that you measured the right things. Manually go through data samples to look at edge cases.

Step 4: Edge optimization and deployment

Set up a separate optimization project involving Firmware and Machine Learning Engineers. Wait months for a first result.

The new way of doing Edge AI

The process of developing an Edge AI product does not have to be so costly, impractical, or frustrating. We believe it can and should be a whole lot faster and more fun. Now, imagine the same development framework but with a lot less friction:

Step 1: Data capture

Capture perfectly synchronized data from multiple devices together with metadata, such as video and sound. Having everything on a shared timeline makes the labeling and verification steps that follow much easier.
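To make the idea of synchronized capture concrete, here is a minimal Python sketch of one common way to line up two sensor streams after the fact: nearest-timestamp matching. It is an illustration only and not how Imagimob Capture works internally; the function name `align_nearest`, the millisecond timestamps, and the `max_gap_ms` tolerance are all assumptions made for this example.

```python
from bisect import bisect_left


def align_nearest(ref_ts, other_ts, other_vals, max_gap_ms=5):
    """Match each reference timestamp to the closest sample of another stream.

    ref_ts and other_ts are sorted lists of timestamps in milliseconds.
    Returns one value per reference timestamp, or None where the closest
    sample is more than max_gap_ms away (hypothetical tolerance).
    """
    aligned = []
    for t in ref_ts:
        i = bisect_left(other_ts, t)
        # Only the samples just before and just after t can be the closest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        best = min(candidates, key=lambda j: abs(other_ts[j] - t), default=None)
        if best is None or abs(other_ts[best] - t) > max_gap_ms:
            aligned.append(None)
        else:
            aligned.append(other_vals[best])
    return aligned


# Example: an accelerometer clock as reference, a second sensor with jitter.
accel_ts = [0, 10, 20, 30]
gyro_ts = [1, 12, 19, 50]
gyro_vals = ["a", "b", "c", "d"]
matched = align_nearest(accel_ts, gyro_ts, gyro_vals)
```

Samples that cannot be matched within the tolerance are left as gaps rather than guessed, which is usually the safer choice when the data will later be used for training.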

Step 2: Data labeling

Label only a subset of your data, then let your AI models label the rest as they learn and improve.

Labeling is much easier thanks to synchronized playback of time-series data and metadata.
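The "label a subset, let the model label the rest" idea can be sketched in a few lines of Python. This is a generic confidence-thresholded auto-labeling loop, not Imagimob's implementation: the one-dimensional features, the nearest-centroid classifier, the function names, and the 0.8 threshold are all assumptions chosen to keep the example small.

```python
def centroids(features, labels):
    """Mean feature value per class, computed from the hand-labeled subset."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict_with_confidence(x, cents, eps=1e-9):
    """Return (label, confidence), with confidence from inverse distances."""
    weights = {y: 1.0 / (abs(x - c) + eps) for y, c in cents.items()}
    best = max(weights, key=weights.get)
    return best, weights[best] / sum(weights.values())


def auto_label(unlabeled, cents, threshold=0.8):
    """Accept confident predictions; send the rest back for manual review."""
    accepted, needs_review = [], []
    for x in unlabeled:
        label, conf = predict_with_confidence(x, cents)
        (accepted if conf >= threshold else needs_review).append((x, label))
    return accepted, needs_review


# A small hand-labeled subset (hypothetical feature: mean signal energy).
cents = centroids([0.9, 1.1, 4.9, 5.1], ["idle", "idle", "walk", "walk"])
accepted, needs_review = auto_label([1.2, 3.0, 4.7], cents)
```

In this example the samples near a class centroid are labeled automatically, while the ambiguous sample halfway between the two classes is routed back to a human. Repeating the loop after each round of manual corrections is what lets the model improve as it labels.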

Step 3: Verification

Automatically visualize all of your models and their predictions superimposed on top of one another. Now you’ll know not only what they predict, but when and with what level of confidence.
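As a rough illustration of what superimposing predictions means in data terms, the Python sketch below merges the outputs of several models onto one shared timeline and flags the moments where they disagree. The data layout, model names, and function names are hypothetical and are not taken from Imagimob Studio.

```python
def overlay(predictions):
    """Merge per-model predictions onto a single timeline.

    predictions maps a model name to a list of
    (timestamp_ms, label, confidence) tuples.
    Returns {timestamp_ms: {model_name: (label, confidence)}}, sorted by time.
    """
    timeline = {}
    for model, preds in predictions.items():
        for t, label, conf in preds:
            timeline.setdefault(t, {})[model] = (label, conf)
    return dict(sorted(timeline.items()))


def disagreements(timeline):
    """Timestamps at which the models do not all predict the same label."""
    return [
        t
        for t, by_model in timeline.items()
        if len({label for label, _ in by_model.values()}) > 1
    ]


predictions = {
    "model_a": [(0, "idle", 0.90), (100, "walk", 0.60)],
    "model_b": [(0, "idle", 0.80), (100, "idle", 0.55)],
}
timeline = overlay(predictions)
conflicts = disagreements(timeline)
```

Here both models agree at 0 ms, but at 100 ms they disagree and neither is very confident, which is exactly the kind of moment worth inspecting against the raw data and video.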

Step 4: Edge optimization and deployment

Happy with the accuracy of your AI application? Just press a button to optimize it for the Edge and get a final application that can be integrated in minutes.

How? The Imagimob AI Software Suite

Our software suite covers the entire development process—from data collection to the deployment of an application in an edge device. To make this possible, we’ve developed two products: Imagimob Capture and Imagimob Studio.

IMAGIMOB CAPTURE

For fast and painless data collection. For years, Imagimob AI Engineers have used this tool to collect data from battery-powered sensors in the field. Now, it’s available to you as a mobile app.

By supporting data collection over WiFi and Bluetooth, Imagimob Capture is the perfect tool for data collection from Edge/IoT devices. Imagimob Capture comprises a mobile app and several capture devices. It’s used to capture and label synchronized sensor data and videos (or other metadata) during the data-capture phase. Highly accurate, Imagimob Capture even works for collecting sensor data from high-speed sensors such as radars or very high-frequency accelerometers. The mobile app enables immediate labeling in the field. Once the data is ready, it’s sent to a dedicated cloud service for further processing. Simply start the app, connect to a sensor device using WiFi or Bluetooth, and press record.

What you can do with it:

• Connect to and record sensor data from any edge device

• Record data over WiFi or Bluetooth

• Record video and sound for visual reference

• Label data live using your phone or a remote control

• Generate time-synchronized data, videos, and labels

• Upload capture sessions to the Cloud

Results:

• Faster and more accurate data collection

• Significantly less development time

• A data stream of videos, sensor data, and labels—perfectly synched down to a millisecond

IMAGIMOB STUDIO

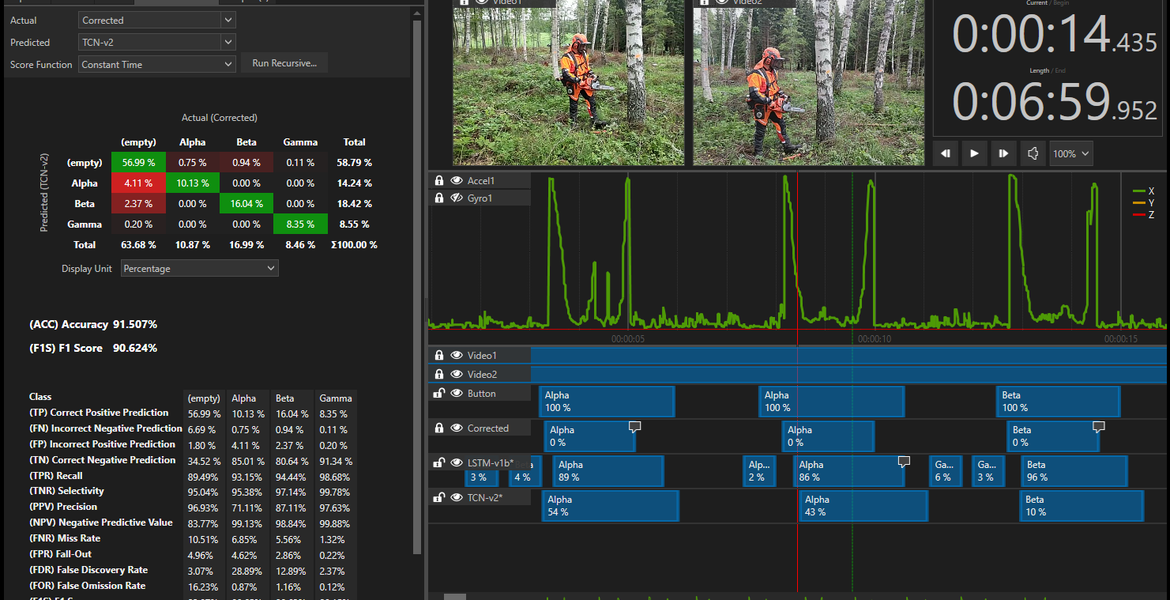

For faster development and better performance. Most AI development tools let you visualize a wide range of performance metrics. Very few let you visualize all of your data, all of your predictions, plus the confidence levels of every one of your models—all on a single timeline.

Imagimob Studio speeds up the entire process of building an AI application. Import and organize your data. Label part of your data, then let your AI models label the rest, down to the millisecond level. Build new AI models or import existing ones. Then get direct feedback on their performance in parallel. Imagimob Studio lets you see, hear, and understand how your AI models are performing at every step in time. Quickly identify and correct errors, and visualize the improved results in an instant. Happy with what you see? Package it for your Edge platform with the press of a button.

What you can do with it:

• Build Edge AI applications for time-series/sensor data input

• Access all of your data in one place

• Automatically split and manage different data sets

• Efficiently label all or parts of your data

• Generate new AI models or import models built with other tools (TensorFlow, Caffe, etc.)

• Output optimized C models for Edge AI applications (battery-powered hardware, etc.)

• Evaluate the performance of all your models in parallel

• Visualize the predictions of all models in real-time on top of the input data

• Visualize the confidence of predictions

• Display all the standard metrics (confusion matrices, F1 score, recall, precision, etc.)

• Import/Export AI models to/from other tools
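For readers less familiar with the standard metrics mentioned in the list above, the Python sketch below shows how a confusion matrix, recall, and F1 score, along with the closely related precision, are computed from true and predicted labels. It uses only the standard library; the function names and the sample labels are made up for illustration and do not reflect how Imagimob Studio calculates or displays these values.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred):
    """Count how often each (true label, predicted label) pair occurs."""
    return Counter(zip(y_true, y_pred))


def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 score for one class of interest."""
    cm = confusion_matrix(y_true, y_pred)
    tp = cm[(positive, positive)]
    # False positives: predicted as the class, but actually something else.
    fp = sum(n for (t, p), n in cm.items() if p == positive and t != positive)
    # False negatives: actually the class, but predicted as something else.
    fn = sum(n for (t, p), n in cm.items() if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


y_true = ["walk", "walk", "idle", "idle", "walk"]
y_pred = ["walk", "idle", "idle", "walk", "walk"]
cm = confusion_matrix(y_true, y_pred)
precision, recall, f1 = precision_recall_f1(y_true, y_pred, positive="walk")
```

Aggregate numbers like these summarize a whole dataset, which is why they are most useful alongside a timeline view that shows where the individual errors occur.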

Results:

• Faster overall development time

• Significantly less labeling time

• Faster performance verification

• Lower power use

• Reduced bandwidth

• Higher speeds

• A clear understanding of how your AI models are performing out in the real world at all times

• More time to direct your efforts where they matter most

What can Imagimob do for your next Edge AI project?

Sign up for a Free Trial of Imagimob AI here.