If you want to solve a problem successfully using machine learning, the foundation of your work should not be a set of fancy algorithms. Rather, your first step should be collecting high-quality data. After all, data is what the machine learning model will learn from. And if the data is incorrect, of poor quality, or inaccurately labelled, no fancy algorithm in the world can save you.

We know this at Imagimob because we’ve tried and failed plenty of times before finally cracking the formula for successful AI projects. And based on what we’ve learned, the first priorities for anyone looking to solve a problem using machine learning should be figuring out what data to collect and what tools to use to collect it.

Let's start with the tools

When we first started running AI projects, we had no tools. So we built some extremely crude ones. At Imagimob, we build machine learning models that act on time-series information. Time-series information, or data, is basically made up of signals or events happening over time, like signals from sensors, an engine, or a radar.

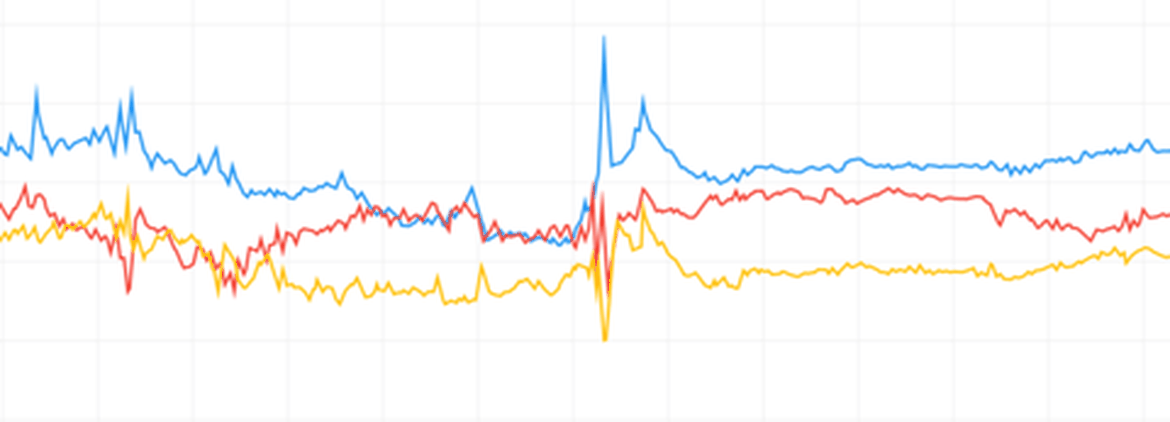

So we built tools to collect and store this kind of data very efficiently, only to find out that humans are not very good at spotting patterns in it. It is very difficult to understand what raw sensor data actually means, as the picture above illustrates. So now we had a lot of high-quality data and no way of accurately labelling it.

This meant that the machine learning model had the right data, but the wrong annotations. In effect, the way we labelled the data was misleading the model. What we learned the hard way was that, in our branch of machine learning, we need to collect a lot of metadata: not for the algorithms, but to enable us humans to teach the algorithms.

So we developed data collection tools that can collect video and audio together with the time-series data, and even annotate the data on the fly. This means that we can start labelling on the spot. Additionally, if we need to collect data over the course of days or months, we can now go through the data as if we were there in real time, with the ability to freeze time, rewind, and get the labels just right.
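One simple way to represent labels created on the spot is to store each one as a time interval on the same clock as the sensor stream, and later map every sensor sample to the interval it falls in. The sketch below illustrates that idea under stated assumptions: the `Annotation` class and `label_samples` function are hypothetical names, not part of Imagimob Capture or its file format, and the video, audio and sensor streams are assumed to already share a synchronized clock.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass


@dataclass
class Annotation:
    """Hypothetical label covering a time interval, e.g. set while replaying synced video."""
    start_s: float  # interval start, in seconds on the shared recording clock
    end_s: float    # interval end (inclusive), in seconds on the same clock
    label: str


def label_samples(timestamps, annotations, default="unlabelled"):
    """Give every sensor sample the label of the annotation interval it falls in.

    Assumes `timestamps` is sorted ascending and uses the same clock as the
    annotations. Where intervals overlap, later annotations overwrite earlier ones.
    """
    labels = [default] * len(timestamps)
    for ann in annotations:
        # Binary search for the first and one-past-last sample inside the interval.
        lo = bisect_left(timestamps, ann.start_s)
        hi = bisect_right(timestamps, ann.end_s)
        for i in range(lo, hi):
            labels[i] = ann.label
    return labels


# Hypothetical 10 Hz motion-sensor stream, two seconds long.
ts = [i / 10 for i in range(20)]
anns = [Annotation(0.5, 0.9, "walking"), Annotation(1.2, 1.5, "sitting_down")]
print(label_samples(ts, anns))
```

Storing intervals rather than per-sample labels keeps annotation cheap while reviewing recordings, since a label can be nudged by adjusting two timestamps and re-running the mapping.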

But even with the perfect tools, you need to know what data to collect

This is where it gets interesting. Of course, what data you should collect depends heavily on your specific problem, and machine learning can be applied to so many areas that it’s difficult to generalize. But we do have one hard-earned lesson to share with you: measure performance from the end-user perspective.

The "real" world sometimes differs from the academic world, and machine learning is a good example. In machine learning academia, accuracy numbers are everything. You "win" if you create a model that solves a problem with the highest accuracy, regardless of whether the collected data is representative of a real-world scenario, or whether an accuracy number even makes sense as a measurement in that scenario.

Here’s an example:

Let's say that you are designing a machine learning model that detects whether a person is falling by reading data from a motion sensor attached to their body. Let's also say that you’re measuring the performance of your solution in the standard academic way. Your measurements might look like this:

Correctly detecting a fall when an actual fall has occurred (sensitivity): 95% accuracy

Correctly detecting that no fall occurred when no actual fall occurred (specificity): 99.5% accuracy
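The two numbers above are what are commonly called sensitivity (true positive rate) and specificity (true negative rate), and both come straight from a confusion matrix on a test set. The sketch below shows the computation; `detection_rates` is a hypothetical helper, and the counts are invented purely so that they reproduce the 95% and 99.5% from the example.

```python
def detection_rates(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts.

    sensitivity = TP / (TP + FN): share of real falls that were detected
    specificity = TN / (TN + FP): share of non-fall cases correctly left alone
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical test-set counts, chosen only to match the example percentages.
sens, spec = detection_rates(tp=190, fn=10, tn=1990, fp=10)
print(f"sensitivity={sens:.1%}, specificity={spec:.1%}")
```

Note that neither number says anything about time: a test set of 2,000 non-fall cases could represent an hour of one person's day or a year of it.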

These numbers look impressive: 95% and 99.5%! Almost unheard of. Too bad they don't mean anything in the real world.

What you should have done is collect data from real people over extended periods of time. That way, you can translate the second number into a measurement that matters to the user: the number of false triggers per user per day. Over a day, a person makes a large number of everyday movements that the model has to classify as "not a fall", so even a 0.5% error rate on those movements adds up. By measuring it this way, you would see that 99.5% accuracy translates into several false triggers per day, and a product that is, quite frankly, not good enough for the market.
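The translation from a per-decision rate to false triggers per user per day is simple arithmetic, but it needs a number the academic benchmark never reports: how many non-fall situations the detector has to judge per user per day. The sketch below shows both the back-of-the-envelope estimate and the direct measurement from long real-world recordings. The function names, the figure of 1,000 everyday movements per day, and the field recordings are all hypothetical, and the estimate assumes each decision errs independently.

```python
def expected_false_triggers_per_day(specificity, non_fall_decisions_per_day):
    """Estimate false alarms per user per day, assuming each non-fall decision
    is wrong independently with probability (1 - specificity)."""
    return (1.0 - specificity) * non_fall_decisions_per_day


def observed_false_triggers_per_user_day(recordings):
    """Measure the rate directly from long real-world recordings.

    `recordings` maps a user id to (hours_recorded, false_trigger_count).
    Returns false triggers per day for each user, so outliers stay visible.
    """
    return {
        user: count / (hours / 24.0)
        for user, (hours, count) in recordings.items()
    }


# Hypothetical: the detector judges about 1,000 everyday movements per user per day.
print(round(expected_false_triggers_per_day(0.995, 1_000), 2))  # → 5.0

# Hypothetical field data: two users, each recorded for several days.
print(observed_false_triggers_per_user_day({"user_a": (72.0, 15), "user_b": (48.0, 4)}))
# → {'user_a': 5.0, 'user_b': 2.0}
```

The estimate scales linearly with the number of decisions, so the windowing matters: a model that classifies a new window every two seconds over 16 waking hours makes 28,800 decisions a day, and at 99.5% specificity that would be on the order of 144 false triggers. Only recordings from real users tell you which regime your product is actually in.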

The most important tip we can offer for your next AI project is to collect this kind of data and these measurements as early as possible. Not only will this result in a better product, it will also save you time. A lot of time.

And, of course, the best way to collect this data is using Imagimob Capture, our tool for data collection. Contact us to learn more.

Alexander Samuelsson, CTO and Co-Founder

About the video below: In a project with Husqvarna Group we are doing data collection together with professional foresters.