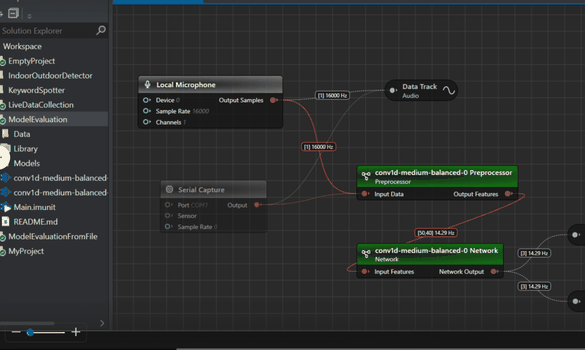

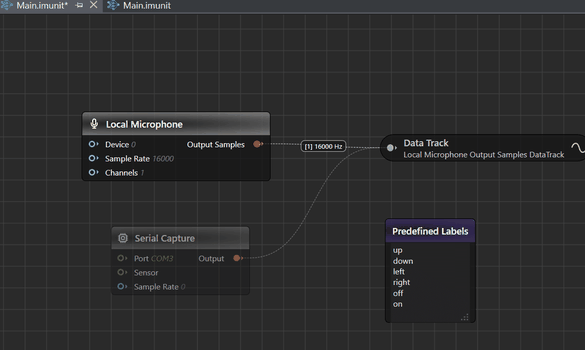

Graph UX is an intuitive interface to visualize the end-to-end ML workflow as graphs. This graph ML framework is designed to provide a clear understanding of the modeling workflow from building to evaluating machine learning models.

Modern-day ML is all about neural networks, which are easily represented as graphs. The graph analogy carries through the whole machine learning development process, from data collection through model building and training, all the way to model evaluation and on-device deployment. Putting these parts together in one coherent view makes it easier to design your own Edge AI models.


1. Collect and annotate high-quality data

2. Manage, analyze and process your data

3. Build great models without being an ML expert

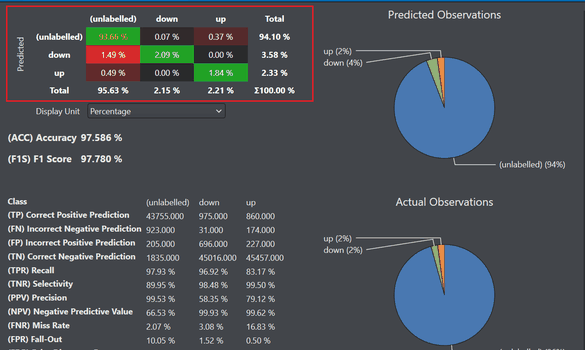

4. Evaluate, verify and select the best models

5. Quickly deploy your models on your target hardware

Predictive maintenance - Recognize machine states, detect machine anomalies and act within milliseconds, on device.

Audio applications - Classify sound events, spot keywords, and recognize your sound environment.

Gesture recognition - Detect hand gestures using low-power radars, capacitive touch sensors or accelerometers.

Signal classification - Recognize repeatable signal patterns from any sensor.

Fall detection - Detect falls using IMUs or a single accelerometer.

Material detection - Detect materials in real time using low-power radars.

And no data ever leaves the device without your permission.

Are you tired of the mundane process of collecting data from your embedded devices and sensors, spending days figuring out how to capture good-quality data, and then spending even more time annotating and cleaning all of it?

With DEEPCRAFT™ Studio you get several tools for collecting high-quality data straight from any hardware or sensor, either wirelessly or by cable. Capture data with your phone out in the field, or capture it directly on any computer or platform running Python. Once the data is collected, DEEPCRAFT™ Studio verifies that all of it is consistent and error-free.

Our experience shows that about 80% of the time spent in a successful AI project goes into collecting, annotating, cleaning and processing data, and experimenting with different datasets. Many engineers find this to be the most frustrating part of the whole process.

With DEEPCRAFT™ Studio, once your data is collected, it can be annotated easily and efficiently by dragging out labels on top of it. Labels can then be copied, resized and modified in seconds. To automate the process further, we also provide scripts that annotate data for you, automatically, according to criteria you define.
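To make "annotate according to your set criteria" concrete, here is a minimal Python sketch of one possible criterion: label every stretch where the signal magnitude stays above a threshold. This is not DEEPCRAFT™ Studio's scripting API; the function name `auto_label`, the `Label` record, the threshold criterion and the `min_duration` filter are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Label:
    """A labeled time span in seconds (hypothetical label format)."""
    start: float
    end: float
    name: str


def auto_label(samples, sample_rate, threshold, name, min_duration=0.0):
    """Label every contiguous run where |sample| >= threshold.

    Hypothetical criterion for illustration only. Runs shorter than
    `min_duration` seconds are discarded.
    """
    labels = []
    run_start = None  # index where the current above-threshold run began
    for i, value in enumerate(samples):
        active = abs(value) >= threshold
        if active and run_start is None:
            run_start = i
        elif not active and run_start is not None:
            labels.append(Label(run_start / sample_rate, i / sample_rate, name))
            run_start = None
    # Close a run that lasts until the end of the recording.
    if run_start is not None:
        labels.append(Label(run_start / sample_rate, len(samples) / sample_rate, name))
    return [lab for lab in labels if lab.end - lab.start >= min_duration]


signal = [0.0, 0.1, 0.9, 1.2, 0.8, 0.1, 0.0, 1.5, 0.0]
for label in auto_label(signal, sample_rate=10, threshold=0.5, name="event"):
    print(label)
```

A real labeling script would typically use a smoothed or windowed feature (for example RMS energy) rather than raw sample values, but the structure of scanning for runs and emitting time spans stays the same.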

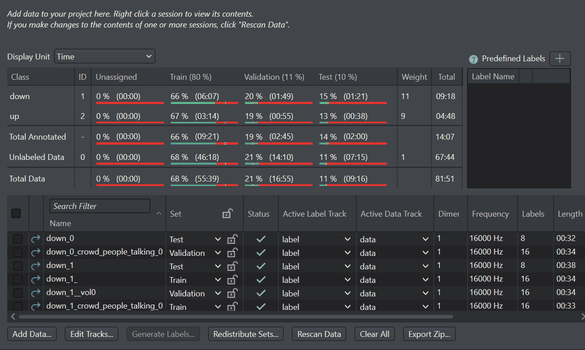

Once data is collected, it must be presented to the model in the right way for the model to "learn", i.e. tune its trainable parameters to fit the training data without overfitting, so that it also performs well on data it has never encountered before.

DEEPCRAFT™ Studio has a well-designed, easy-to-use workflow for organizing all collected data into different datasets, getting you lined up for model building and training. Are you an experienced user? Then you might wonder whether you can control exactly which samples go into which dataset. Of course you can!
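A common way to divide recordings into training, validation and test sets is to split at the file level, so that windows from one recording never end up in two sets, while letting the user pin individual files to a chosen set. The Python sketch below illustrates that idea under stated assumptions: the function name `assign_datasets`, the 70/15/15 ratios and the hash-based assignment are illustrative choices, not a description of how DEEPCRAFT™ Studio works internally.

```python
import hashlib


def assign_datasets(files, ratios=(0.70, 0.15, 0.15), overrides=None):
    """Assign each file to 'train', 'validation' or 'test'.

    Assignment is deterministic: a hash of the file name picks a bucket,
    so the same file always lands in the same set across runs.
    `overrides` maps file names to a set name and takes precedence.
    The test set receives whatever is left after train and validation.
    """
    overrides = overrides or {}
    train_cut = ratios[0]
    val_cut = ratios[0] + ratios[1]
    assignment = {}
    for name in files:
        if name in overrides:
            assignment[name] = overrides[name]
            continue
        # Map the file name to a stable number in [0, 1].
        digest = hashlib.sha256(name.encode("utf-8")).digest()
        fraction = int.from_bytes(digest[:8], "big") / 2**64
        if fraction < train_cut:
            assignment[name] = "train"
        elif fraction < val_cut:
            assignment[name] = "validation"
        else:
            assignment[name] = "test"
    return assignment


recordings = [f"session_{i:03d}.wav" for i in range(20)]
split = assign_datasets(recordings, overrides={"session_007.wav": "test"})
print(split["session_007.wav"])  # → test
```

Hashing the file name, rather than shuffling the whole list, keeps existing assignments stable when new recordings are added later, so a file that was once in the test set does not silently drift into training.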

Even the most experienced machine learning engineers make costly mistakes when preparing data for model building. These errors include mislabeled data, inconsistent sampling frequencies, or mixing data of different dimensionality, such as stereo and mono audio files when building a sound event detector. Such mistakes can cause very poor model performance and take days to detect and fix.

In our workflow these errors are instantly located and easily tracked down.
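Several of the errors listed above can be caught with simple checks before training. Below is a minimal Python sketch, assuming each recording's metadata (sample rate, channel count, label names) has already been read into a small record. The `Recording` type, the function `find_inconsistencies` and the allowed-label set are hypothetical and do not describe what the tool does internally. Note that the label check only catches unknown label names, not samples tagged with the wrong but valid class.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Recording:
    """Hypothetical per-file metadata gathered before model building."""
    path: str
    sample_rate: int  # Hz
    channels: int     # 1 = mono, 2 = stereo
    labels: tuple     # label names used in this file's annotations


def find_inconsistencies(recordings, allowed_labels):
    """Return human-readable problems found across a set of recordings."""
    if not recordings:
        return []
    problems = []
    # Treat the most common sample rate and channel count as the expected ones.
    expected_rate = Counter(r.sample_rate for r in recordings).most_common(1)[0][0]
    expected_ch = Counter(r.channels for r in recordings).most_common(1)[0][0]
    for r in recordings:
        if r.sample_rate != expected_rate:
            problems.append(
                f"{r.path}: sample rate {r.sample_rate} Hz, expected {expected_rate} Hz")
        if r.channels != expected_ch:
            problems.append(f"{r.path}: {r.channels} channel(s), expected {expected_ch}")
        for label in r.labels:
            if label not in allowed_labels:
                problems.append(f"{r.path}: unknown label '{label}'")
    return problems


data = [
    Recording("dog_01.wav", 16000, 1, ("bark",)),
    Recording("dog_02.wav", 16000, 2, ("bark",)),  # stereo among mono files
    Recording("dog_03.wav", 44100, 1, ("brak",)),  # wrong rate and a label typo
    Recording("dog_04.wav", 16000, 1, ("bark",)),
]
for problem in find_inconsistencies(data, allowed_labels={"bark", "background"}):
    print(problem)
```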


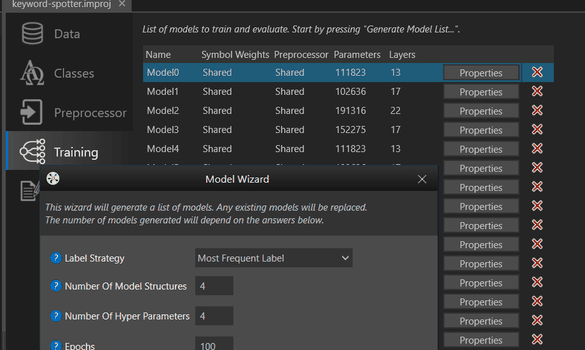

A great machine learning engineer knows the importance of running quick experiments: training different models, evaluating the results, adjusting accordingly and doing it all again. This used to be a tedious process that relied on intuition gained from thousands of previous experiments, plus access to massive amounts of raw compute power. The winners were those with access to the best engineers and the most compute power.

With DEEPCRAFT™ Studio’s AutoML functionality, the intuition of our experienced ML engineers is built in. It automatically generates high-performance AI models tailored to your data, and these models are optimized for speed and a low footprint before deployment. Even better, your generated models are fully transparent, so you can view, edit or delete them as you like.

Once your models are generated, training starts in our cloud with the click of a button. At this stage you want results as quickly as possible, so that you can evaluate them and adjust accordingly.

This is achieved by training your models on high-performance hardware, but one thing really sets us apart: DEEPCRAFT™ Studio always trains several of your models in parallel, at least four at once, which gives you roughly four times the throughput of training one model at a time.

Normally, when building AI models for embedded devices, you have to deploy them on the device before live testing can be performed. This is time-consuming and frustrating, especially early in a project, when new data is collected and new models are built at a rapid pace.

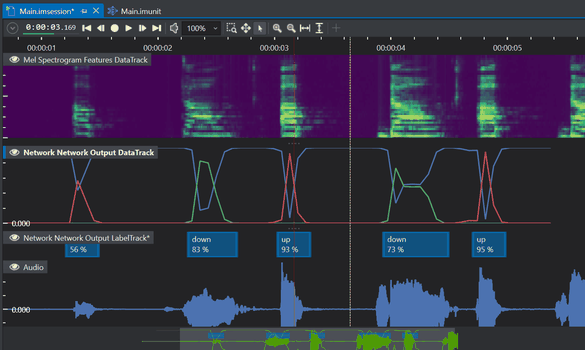

DEEPCRAFT™ Studio solves this problem by showing the model predictions on the same timeline as your data. This lets you see exactly how the AI model interprets all of your data, in real time, and you can even play it back. This gives you a clear picture of how the AI model will perform live in the field, before you deploy it. The biggest benefit is that you can postpone deployment and live tests until you have a good model, which saves a lot of time.
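Models for time-series data typically run on fixed-length, often overlapping windows, so placing predictions on the data's timeline amounts to converting each window index into a time span. The Python sketch below assumes a hypothetical window length and hop size in samples and one list of class scores per window; the function name `predictions_to_timeline` and the example numbers are illustrative, not taken from DEEPCRAFT™ Studio.

```python
def predictions_to_timeline(predictions, class_names, window, hop, sample_rate):
    """Convert per-window predictions into (start_s, end_s, class, score) rows.

    predictions: one list of class scores per window, in window order.
    window, hop: window length and step between windows, in samples.
    """
    timeline = []
    for index, scores in enumerate(predictions):
        start = index * hop / sample_rate
        end = (index * hop + window) / sample_rate
        # Pick the highest-scoring class for this window.
        best = max(range(len(scores)), key=lambda k: scores[k])
        timeline.append((start, end, class_names[best], scores[best]))
    return timeline


# Hypothetical two-class model: 1 s windows with a 0.5 s hop at 100 Hz.
preds = [[0.9, 0.1], [0.3, 0.7], [0.2, 0.8]]
for row in predictions_to_timeline(preds, ["idle", "anomaly"],
                                   window=100, hop=50, sample_rate=100):
    print(row)
# (0.0, 1.0, 'idle', 0.9)
# (0.5, 1.5, 'anomaly', 0.7)
# (1.0, 2.0, 'anomaly', 0.8)
```

Each row can then be drawn as a colored band underneath the recorded signal; because overlapping windows produce overlapping spans, a viewer usually shows either the latest window's prediction or a smoothed score at each point in time.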

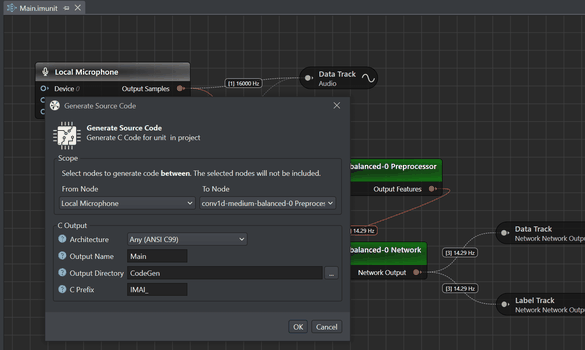

Packaging an AI model for an embedded device can take months. A firmware engineer needs to port the model to an Edge AI framework, and in most cases only the model, not the data preprocessing, is translated automatically. Once translated, the model must be tested and optimized, which requires expert knowledge.

With DEEPCRAFT™ Studio, this step is done with the click of a button. In just a matter of seconds your AI model is optimized, verified and packaged.

When you click "Build Edge", a simple API is created and the model is ready to be deployed on the embedded device. DEEPCRAFT™ models are self-contained, highly optimized C code and can therefore be deployed on almost any platform.

DEEPCRAFT™ Studio is built by a team of engineers, creators and researchers with one goal in mind: helping you create the best possible AI applications for small devices. That is why we have built an end-to-end solution that optimizes for edge devices at every step, from data collection through model building and verification to final deployment.

We clocked it. Without DEEPCRAFT™ Studio it took 10 weeks to collect a dataset and build and deploy an AI model on an edge device. With DEEPCRAFT™ Studio it took one week to reach the same level of performance.
